Unveiling the Power of Knowledge Graphs: A Hallucination-Free LLM Experience

By: Keith Copeland

In recent years, the advent of Large Language Models (LLMs) has revolutionized the field of natural language processing. These models, like GPT-3 and GPT-4, have demonstrated an incredible ability to generate human-like text, making them invaluable tools for a variety of applications ranging from chatbots to content creation. However, despite their impressive capabilities, LLMs are not without their flaws. One of the most significant issues plaguing these models is the phenomenon of "hallucination," where the model generates information that is factually incorrect or nonsensical. This can be particularly problematic in applications where accuracy and reliability are paramount.

Enter Knowledge Graphs (KGs) and ontologies. These structured representations of knowledge offer a promising solution to the hallucination problem by providing a grounded, verifiable source of information that LLMs can reference. In this blog, we'll explore how integrating Knowledge Graphs with LLMs can create a more reliable, hallucination-free experience.

The Hallucination Challenge in LLMs

The root cause is that LLMs generate text by reproducing statistical patterns learned from vast datasets rather than by consulting a store of verified facts. A response can therefore sound fluent and confident while being factually inaccurate, nonsensical, or unsupported by the context of the question.

This can happen for several reasons:

Lack of Context: The model may not have access to the specific facts or documents a question depends on, so it fills the gap with plausible-sounding text.

Data Limitations: The training data may be incomplete, outdated, or biased, leaving gaps in the model's knowledge.

Overgeneralization: LLMs may overgeneralize from the data, creating connections that don't actually exist.

For businesses relying on AI for critical decision-making, these hallucinations can lead to costly errors and undermine trust in AI systems.

What Are Knowledge Graphs?

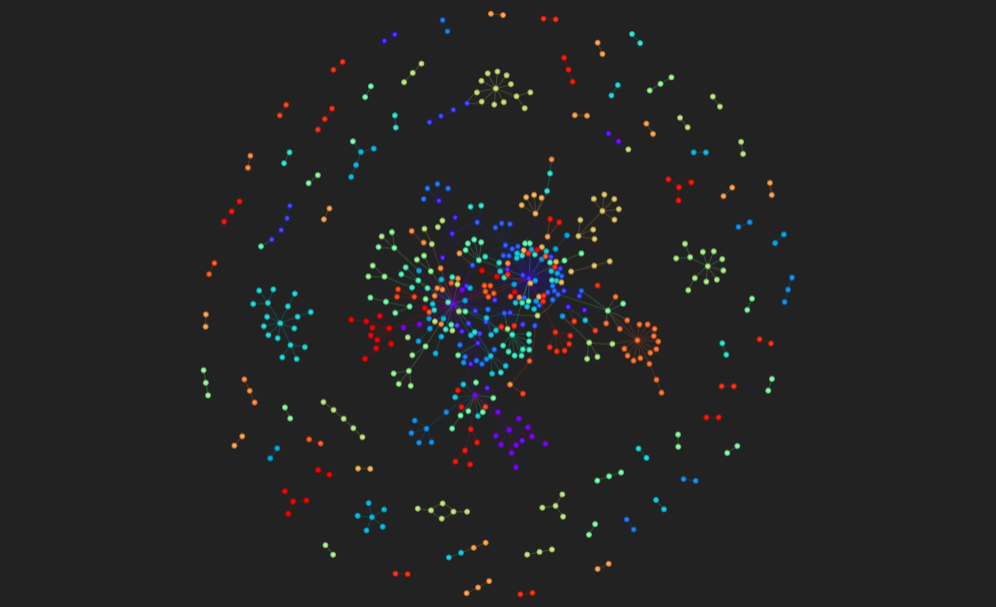

Example: a Knowledge Graph created from a query response, with each source document shown in a different colour and the interconnections between pieces of knowledge drawn as links.

A Knowledge Graph is a structured representation of knowledge in the form of entities (such as people, places, and concepts) and the relationships between them. These graphs are built using ontologies, which define the classes, properties, and relationships that make up the domain of knowledge. For example, in a medical ontology, entities might include "Doctor," "Patient," and "Disease," with relationships like "treats" and "suffers from."
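As a minimal sketch of the entity-and-relationship idea, the Python below stores the medical example as (subject, relation, object) triples and tags each entity with its ontology class. The `KnowledgeGraph` class and the entities `Dr. Smith`, `Alice`, and `Influenza` are hypothetical; real deployments would typically use a graph database or an RDF triple store rather than an in-memory set.

```python
from collections import defaultdict


class KnowledgeGraph:
    """A tiny in-memory knowledge graph (illustrative only)."""

    def __init__(self):
        self.triples = set()                # every (subject, relation, object) fact
        self.entity_types = {}              # entity -> ontology class, e.g. "Doctor"
        self.outgoing = defaultdict(set)    # subject -> {(relation, object), ...}

    def add_entity(self, name, cls):
        self.entity_types[name] = cls

    def add_fact(self, subject, relation, obj):
        self.triples.add((subject, relation, obj))
        self.outgoing[subject].add((relation, obj))

    def objects(self, subject, relation):
        """Return every object linked to `subject` by `relation`."""
        return {o for r, o in self.outgoing[subject] if r == relation}


# Hypothetical entities following the Doctor / Patient / Disease example.
kg = KnowledgeGraph()
kg.add_entity("Dr. Smith", "Doctor")
kg.add_entity("Alice", "Patient")
kg.add_entity("Influenza", "Disease")
kg.add_fact("Dr. Smith", "treats", "Alice")
kg.add_fact("Alice", "suffers_from", "Influenza")

print(kg.objects("Alice", "suffers_from"))  # {'Influenza'}
```

Each fact is a single edge, so a question such as "what does Alice suffer from?" becomes a lookup rather than a guess.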

Knowledge Graphs are particularly valuable because they provide a way to represent complex, interconnected information in a manner that is both human-readable and machine-interpretable. This makes them an excellent tool for applications that require a deep understanding of the relationships between different pieces of information.

How Knowledge Graphs Mitigate Hallucinations

Knowledge Graphs offer a structured, reliable source of information that can be used to ground the responses generated by LLMs. Here are some ways in which KGs can mitigate hallucinations:

Contextual Grounding: By referencing a Knowledge Graph, an LLM can ground its responses in verified information, making the generated text more contextually relevant and far more likely to be factually accurate.

Enhanced Reasoning: Knowledge Graphs enable more sophisticated reasoning. By following chains of relationships in the graph, an LLM can infer information that no single fact states directly, providing more comprehensive and accurate answers.

Verification and Validation: Outputs generated by LLMs can be cross-referenced with the Knowledge Graph to verify their accuracy. Any inconsistencies can be flagged and corrected, ensuring the reliability of the information.
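The verification and reasoning ideas above can be sketched in a few lines, assuming an upstream step has already turned the LLM's answer into (subject, relation, object) triples. The fact set, `verify_claims`, and `two_hop` are illustrative assumptions rather than any product's API. A flagged claim is only unsupported by the graph: it may be a hallucination, or the graph may simply be incomplete.

```python
# Verified facts, hard-coded here; in practice they would come from a graph store.
KG_FACTS = {
    ("Paris", "capital_of", "France"),
    ("Berlin", "capital_of", "Germany"),
    ("France", "member_of", "European Union"),
    ("Germany", "member_of", "European Union"),
}


def verify_claims(claims, facts):
    """Split claimed triples into those the graph supports and those it does not."""
    supported = [c for c in claims if c in facts]
    flagged = [c for c in claims if c not in facts]
    return supported, flagged


def two_hop(facts, start, rel1, rel2):
    """Follow rel1 from `start`, then rel2, returning every entity reached.

    This derives a connection that no single stored triple states directly.
    """
    middle = {o for s, r, o in facts if s == start and r == rel1}
    return {o for s, r, o in facts if s in middle and r == rel2}


# Hypothetical triples extracted from an LLM answer.
llm_claims = [
    ("Paris", "capital_of", "France"),
    ("Lyon", "capital_of", "France"),  # not in the graph, so it gets flagged
]

supported, flagged = verify_claims(llm_claims, KG_FACTS)
print("supported:", supported)
print("flagged:", flagged)
print(two_hop(KG_FACTS, "Paris", "capital_of", "member_of"))  # {'European Union'}
```

Here the Lyon claim is flagged for review, and `two_hop` derives that Paris is the capital of an EU member state even though no single triple says so.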

The Role of Ontologies in Enhancing LLMs

Ontologies play a crucial role in the integration of knowledge graphs with LLMs. They define the classes, properties, and relationships within a domain, providing a semantic framework that LLMs can use to interpret and organize information. By prompting LLMs with relevant ontologies, we can transform unstructured text into well-formed knowledge graphs, which can then be used to populate graph stores or further train the models.

This approach offers several advantages. First, it helps keep the generated knowledge graph semantically consistent with the domain-specific ontology, reducing the likelihood of hallucinated entities and relationships. Second, it enables more sophisticated querying and reasoning, because structured data can be easily navigated and analyzed. Finally, it makes AI systems more interpretable and trustworthy, because users can check responses against the underlying knowledge graph.
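One way to make the semantic-consistency benefit concrete is to check each triple an LLM extracts against the ontology before it reaches the graph store. The sketch below assumes a toy ontology in which every relation declares the class of its subject (domain) and object (range); `ONTOLOGY`, `ENTITY_TYPES`, `validate_triple`, and the sample extractions are hypothetical. In RDF-based systems this kind of constraint check is commonly written as SHACL shapes.

```python
# Toy ontology: relation -> (required subject class, required object class).
ONTOLOGY = {
    "treats": ("Doctor", "Patient"),
    "suffers_from": ("Patient", "Disease"),
}

# Entity types, assumed to be known or assigned during extraction.
ENTITY_TYPES = {
    "Dr. Smith": "Doctor",
    "Alice": "Patient",
    "Influenza": "Disease",
}


def validate_triple(subject, relation, obj):
    """Return None if the triple conforms to the ontology, else a reason string."""
    if relation not in ONTOLOGY:
        return f"unknown relation '{relation}'"
    domain, range_ = ONTOLOGY[relation]
    if ENTITY_TYPES.get(subject) != domain:
        return f"subject '{subject}' is not a {domain}"
    if ENTITY_TYPES.get(obj) != range_:
        return f"object '{obj}' is not a {range_}"
    return None


# Triples an LLM might return when prompted with the ontology (hypothetical output).
extracted = [
    ("Dr. Smith", "treats", "Alice"),
    ("Alice", "suffers_from", "Influenza"),
    ("Influenza", "treats", "Alice"),      # violates the subject-class constraint
    ("Alice", "prescribed", "Influenza"),  # relation not defined in the ontology
]

accepted, rejected = [], []
for triple in extracted:
    reason = validate_triple(*triple)
    if reason is None:
        accepted.append(triple)
    else:
        rejected.append((triple, reason))

print(len(accepted), "accepted")
for triple, reason in rejected:
    print("rejected", triple, "->", reason)
```

Only the two well-formed triples are accepted; the other two are rejected with a reason that can be logged or fed back to the LLM to request a corrected extraction.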

Practical Applications and Benefits

The integration of Knowledge Graphs and LLMs can be applied in various domains to enhance the reliability and accuracy of AI-driven solutions:

Healthcare: In medical applications, Knowledge Graphs can be used to integrate and analyze vast amounts of clinical data, providing accurate and contextually relevant information to healthcare professionals.

Finance: Financial institutions can leverage Knowledge Graphs to ensure the accuracy of automated reports and analyses, reducing the risk of errors that could have significant financial implications.

Legal Services: Legal professionals can use Knowledge Graphs to verify the accuracy of information in legal documents and ensure that all references are grounded in verified legal knowledge.

By leveraging knowledge graphs, businesses can unlock the full potential of LLMs, transforming them from powerful language models into reliable, context-aware AI systems. This not only enhances operational efficiency but also provides a competitive edge in an increasingly data-driven world.

Our Innovative Approach

At Rock River, we are developing a cutting-edge methodology that combines the statistical power of LLMs with the structured knowledge of graphs. It uses a planning-retrieval-reasoning framework that lets LLMs access up-to-date knowledge while basing their reasoning on faithful plans grounded in the graph. By distilling knowledge from knowledge graphs through custom algorithms, our method enhances the reasoning capability of LLMs and can be integrated with any LLM at inference time.
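As a generic illustration of the planning-retrieval-reasoning pattern (not Rock River's implementation, which is not detailed here), the sketch below represents a plan as a sequence of relations to follow, retrieves the matching paths from a toy graph, and assembles only those facts into the prompt for the reasoning step. The graph, the fictional company names, `retrieve_paths`, and the hard-coded plan are all assumptions; in a full system an LLM would generate the plan and answer the final prompt.

```python
# Toy graph as (subject, relation, object) triples; the companies are fictional.
GRAPH = [
    ("Acme Corp", "subsidiary_of", "Globex"),
    ("Globex", "headquartered_in", "Springfield"),
    ("Acme Corp", "founded_in", "1999"),
]


def retrieve_paths(graph, start, plan):
    """Follow the relations in `plan` hop by hop, starting from `start`.

    Returns each complete path as a list of triples, so every retrieved fact
    stays traceable to an edge in the graph.
    """
    paths = [(start, [])]  # (entity reached so far, triples used to reach it)
    for relation in plan:
        next_paths = []
        for entity, hops in paths:
            for s, r, o in graph:
                if s == entity and r == relation:
                    next_paths.append((o, hops + [(s, r, o)]))
        paths = next_paths
    return [hops for _, hops in paths]


question = "Where is Acme Corp's parent company based?"

# Planning: a relation path for the question (hard-coded; an LLM would propose it).
plan = ["subsidiary_of", "headquartered_in"]

# Retrieval: follow the plan through the graph.
paths = retrieve_paths(GRAPH, "Acme Corp", plan)

# Reasoning: give the LLM only the retrieved, verifiable facts as context.
context = "\n".join(f"{s} {r} {o}" for path in paths for s, r, o in path)
prompt = f"Answer using only these facts:\n{context}\nQuestion: {question}"
print(prompt)
```

Because every fact in the prompt comes from a retrieved path, the final answer can be traced back to specific edges in the graph.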

Our preliminary experiments have shown promising results, demonstrating significant improvements in the accuracy and interpretability of LLM outputs. By continuously refining and updating the knowledge graph based on insights provided by the LLM, we create a bi-directional data flywheel that enhances both the knowledge graph and the LLM over time.

At Rock River Research, we are dedicated to advancing the capabilities of AI through innovative technologies like knowledge graphs. By addressing the hallucination challenge in LLMs, we are paving the way for more accurate, reliable, and contextually grounded AI systems. We invite businesses to join us in exploring the transformative potential of knowledge graphs and to start the conversation on how this technology can be tailored to meet their specific needs. Don't get left behind—embrace the future of AI with Rock River Research.

By integrating knowledge graphs into your AI strategy, you can ensure that your business stays ahead of the curve, leveraging the most advanced capabilities of AI to drive growth and innovation. Let's embark on this journey together and unlock the true potential of AI.